Use Traefik as a local dev proxy

What the heck is a dev proxy? I’m not sure I’m using the term correctly, but to my understanding, a dev proxy is simply… a reverse proxy used solely for development purposes.

But why do we need one? As microservices become more and more common (I wanted to say popular, but that’s highly opinionated), running multiple services locally while developing a project is needed more often than before. One of the problems is port conflicts. A common convention I see with microservices is to use an almost identical setup for each service, which means they most likely all use the same port. Microservices also usually go hand in hand with Docker, and you can’t bind the same host port twice (even without Docker, two processes normally can’t listen on the same ip:port).

A dev proxy is a simple solution that mitigates this problem and makes working with microservices more enjoyable. In this blog post, I’m gonna describe how I set up a local dev proxy so that I can run multiple services without worrying about their ports.

Traefik in a nutshell

Despite its unusual name, Traefik Proxy (I think it was renamed recently; I remember it being called just Traefik before) is a pretty simple and efficient reverse proxy that works nicely with Docker and popular orchestration tools such as k8s or Docker swarm mode.

It’s in the same category as HAProxy, Nginx (just the reverse proxy part), or any other reverse proxy out there. I picked Traefik simply because I have been using it for quite some time and it’s simple enough to set up and operate.

I won’t cover or explain all of Traefik’s syntax and configuration in this blog post because most of it is self-explanatory. If in doubt, you can consult their documentation.

Set up the proxy

Network

Before we start, we need to make sure all of our Docker containers live in the same network. The network itself needs to be created manually:

    docker network create -d bridge dev-proxy

I’m using a bridge network here (it’s the default driver anyway if you don’t specify one) because we are using a single Docker daemon. If for some reason you have a setup with multiple Docker daemons (Docker swarm mode, for example), you will need to create an overlay network instead. But it’s not common to use swarm mode for development purposes, so bridge is usually the way.

Now that we have the dev-proxy network, all containers must belong to this network in order for our dev proxy to work.
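Running `docker network create` a second time for the same name fails, so a small idempotent wrapper can be handy in setup scripts. This is only a minimal sketch: `ensure_network` is a helper name I made up, and only the `docker network inspect` and `docker network create` subcommands are real.

```shell
# ensure_network NAME: create a bridge network only if it does not exist yet.
# `ensure_network` is a hypothetical helper, not a docker command.
ensure_network() {
  name="$1"
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create -d bridge "$name" >/dev/null
    echo "network $name created"
  fi
}
```

Calling `ensure_network dev-proxy` should then be safe to repeat. For a container that is already running, `docker network connect dev-proxy <container>` attaches it without recreating it.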

Traefik service

With the network stuff out of the way, the next step is to create a Traefik container that receives requests and routes them to the correct service:

    version: "3"

    services:
      proxy:
        image: traefik:v2.3
        restart: unless-stopped
        networks:
          - dev-proxy
        command: --api.insecure=true --providers.docker
        ports:
          # The HTTP port
          - "80:80"
          # The Web UI (enabled by --api.insecure=true)
          - "8080:8080"
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock

    networks:
      dev-proxy:
        external: true

It’s almost self-explanatory, 2 things to note here:

- We need to specify the provider we want to use. Here we are using docker, so --providers.docker. Traefik supports a wide range of providers.

- We need to mount the docker daemon socket so that Traefik can listen to docker events. This is the auto discovery mechanism of Traefik, where it automatically detects new services entering the network without having to reload a configuration file.
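Once the proxy is up (for example with `docker-compose up -d`), the API exposed by `--api.insecure=true` on port 8080 can show what Traefik has discovered so far. The sketch below assumes the Traefik v2 endpoint `/api/http/routers`; `list_routers` is a name I made up for illustration.

```shell
# list_routers [BASE_URL]: print the HTTP routers Traefik currently knows about, as JSON.
# Assumes the insecure API is published on port 8080, as in the compose file above.
# `list_routers` is a hypothetical helper name.
list_routers() {
  base_url="${1:-http://localhost:8080}"
  curl -fsS "$base_url/api/http/routers"
}
```

Running `list_routers` before and after starting a labelled service is a quick way to see the auto discovery at work without opening the web UI.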

Set up other services

Imagine that we have several services in development that need to talk to each other, or simply run together at the same time. We probably don’t want a different port for each of them because it complicates the setup (it’s not good for anyone’s brain to have to remember several different ports a local service might use).

    version: "3"

    services:
      whoami1:
        image: containous/whoami
        networks:
          - dev-proxy
        labels:
          - traefik.http.routers.whoami1_route.rule=Host(`whoami1.local`)
          - traefik.http.routers.whoami1_route.service=whoami1_service
          - traefik.http.services.whoami1_service.loadbalancer.server.port=80

    networks:
      dev-proxy:
        external: true

When using the docker provider, Traefik relies on labels to configure routing for new services. Here we have the whoami1 service, which should be reachable at whoami1.local (you will have to edit the /etc/hosts file to point whoami1.local to 127.0.0.1) and is served from the container’s port 80. This service must also belong to the dev-proxy network (the one we created earlier). Note that there is no port binding here: the service is only reachable through Traefik, which is what prevents port conflicts.
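Because the `Host(...)` rule is matched against the HTTP Host header, the route can be tried out even before /etc/hosts is edited, by sending that header to the proxy directly. A minimal sketch, where `check_route` is a made-up helper name and the proxy is assumed to listen on 127.0.0.1 port 80:

```shell
# check_route HOST [PROXY_URL]: request PROXY_URL while asking for HOST.
# Traefik picks the router from the Host header, so no /etc/hosts entry is needed.
# `check_route` is a hypothetical helper name.
check_route() {
  host="$1"
  proxy_url="${2:-http://127.0.0.1}"
  curl -fsS -H "Host: $host" "$proxy_url/"
}
```

With the service above running, `check_route whoami1.local` should print the whoami response for that container.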

We can then have another, similar service. Both listen on the same internal port 80, which shows that a service can use any port it wants. We don’t care; we just need to remember the host. (I don’t know about other people, but I personally remember text better than numbers. Fun fact: I mix up numbers all the time, both in English and in my native language.)

    version: "3"

    services:
      whoami2:
        image: containous/whoami
        networks:
          - dev-proxy
        labels:
          - traefik.http.routers.whoami2_route.rule=Host(`whoami2.local`)
          - traefik.http.routers.whoami2_route.service=whoami2_service
          - traefik.http.services.whoami2_service.loadbalancer.server.port=80

    networks:
      dev-proxy:
        external: true

Now we can safely and conveniently run both of them at the same time.

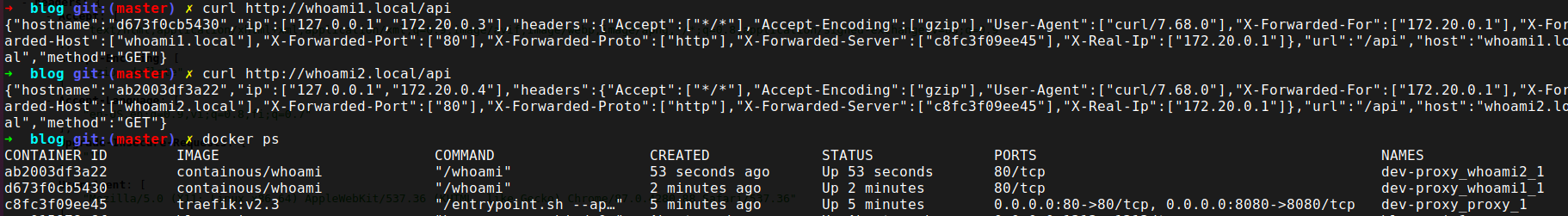

Here we can see that we have 2 docker containers running, and each domain returns a different container ID.

How about database instances?

Traefik can proxy TCP connections just fine, but routing them by domain depends on whether the database supports SNI (Server Name Indication; simply put, the client sends the domain it wants during the TLS handshake). PostgreSQL (my go-to database for most stuff) unfortunately doesn’t support it. Without SNI, we need to define a different port for each database instance, which defeats the purpose of using a dev proxy.
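To make the trade-off concrete, here is a rough sketch of what “one port per database” could look like with Traefik’s TCP routers. Everything specific is an assumption for illustration and not part of my setup: the entry point names `web` and `pg1`, host port 5433, the `db1` service, the `postgres:13` image, the placeholder password and the helper name `write_db_compose`. As far as I know, Traefik stops creating its default port 80 entry point once any entry point is declared explicitly, which is why `web` shows up in the comment too.

```shell
# write_db_compose FILE: write a compose file for one Postgres instance that
# Traefik exposes as plain TCP on its own entry point.
# Assumed changes on the proxy side (hypothetical, not shown in the post):
#   command: --api.insecure=true --providers.docker
#            --entrypoints.web.address=:80 --entrypoints.pg1.address=:5433
#   ports:   "80:80", "8080:8080" and "5433:5433"
write_db_compose() {
  cat > "$1" <<'EOF'
version: "3"

services:
  db1:
    image: postgres:13
    environment:
      POSTGRES_PASSWORD: example
    networks:
      - dev-proxy
    labels:
      # HostSNI(`*`) matches every connection: without SNI there is no name to route on
      - "traefik.tcp.routers.db1_route.rule=HostSNI(`*`)"
      - "traefik.tcp.routers.db1_route.entrypoints=pg1"
      - "traefik.tcp.routers.db1_route.service=db1_service"
      - "traefik.tcp.services.db1_service.loadbalancer.server.port=5432"

networks:
  dev-proxy:
    external: true
EOF
}
```

A second database would need its own entry point and its own published port, so you are back to remembering port numbers.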

Alternatively, we can set up some kind of web UI for connecting to the database (pgAdmin, for example), or simply avoid using the same port. I will probably write something about this in the future to share my database setup.

What would the development flow look like?

If you haven’t run the dev proxy before, you first need to start it. After that, you develop individual projects as usual, but instead of going to localhost:5000 (replace 5000 with whatever port you usually use), you go to a custom domain such as your-project.local (which, of course, needs to be added to the /etc/hosts file). If you have run the proxy at least once before, there is nothing to do, because with restart: unless-stopped Docker brings it back up whenever the Docker daemon starts.

Now when you add a new service, you need to do:

- update /etc/hosts to have a new domain

- add correct labels to your docker-compose file

- profit
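The first two steps are mechanical enough to script. The helpers below are only a sketch under my own naming (`add_host_entry` and `traefik_labels` are not part of Docker or Traefik), and the `<name>_route` / `<name>_service` pattern simply follows the labels used in this post.

```shell
# add_host_entry DOMAIN [HOSTS_FILE]: map DOMAIN to 127.0.0.1 unless it is already mapped.
# Writing to the real /etc/hosts needs root privileges.
add_host_entry() {
  domain="$1"
  hosts_file="${2:-/etc/hosts}"
  # Look for DOMAIN among the names on any 127.0.0.1 line.
  if awk -v d="$domain" '$1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == d) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '127.0.0.1 %s\n' "$domain" >> "$hosts_file"
}

# traefik_labels NAME DOMAIN PORT: print the three labels for a new service,
# ready to paste under `labels:` in its docker-compose file.
traefik_labels() {
  name="$1"; domain="$2"; port="$3"
  printf 'traefik.http.routers.%s_route.rule=Host(`%s`)\n' "$name" "$domain"
  printf 'traefik.http.routers.%s_route.service=%s_service\n' "$name" "$name"
  printf 'traefik.http.services.%s_service.loadbalancer.server.port=%s\n' "$name" "$port"
}
```

For a hypothetical third service, that would be `add_host_entry whoami3.local` (run as root) followed by `traefik_labels whoami3 whoami3.local 80`.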

Alternative setup

Another setup that I used in the past is to “bundle” the dependent services in the same docker-compose file. This setup is simpler and doesn’t require a dev proxy: each service can be run independently because its compose file pulls the latest Docker images of the services it depends on and starts them all together.

The downside is that if I want to develop 2 services side by side, it takes more time and effort. One service needs to be fully developed and “published” (to a Docker registry, be it local or a centralized hub) before the other one can actually use it. This can be a bad or a good thing depending on various factors. I personally see it as a not-so-good way to do it because one service now knows far more than it needs to about the others. The only thing services should know about each other is their API. They don’t need to know how the API is implemented or care about its dependencies; it’s up to each individual service to decide that.

For example, say service A depends on service B and I want to put both A and B in the same docker-compose file. I have to know exactly how B is set up (what kind of database it uses, whether it has a cache, etc.), and that’s not gonna end well if you have a lot of services. B can change the database it uses in the future, and at that point all services depending on B will have to update their docker-compose files to use the new database. It’s gonna be a mess. Trust me, I’ve been there and done that.