Different ways to protect your API

How to authenticate requests to an API is a question I have to answer almost every time we build a new API service. Over the years, authentication techniques have changed significantly, and so has the overall system architecture. In this post, I’m going to revisit the approaches I have encountered and share my thoughts on each of them.

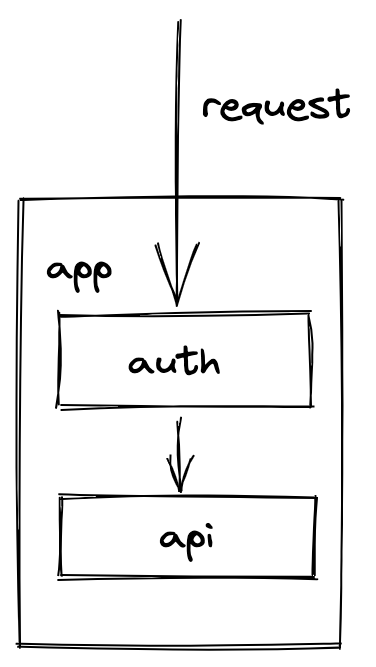

One big monolith

When I wrote my very first web API, the monolith was the norm, and most popular frameworks (Zend, Symfony, Rails, Django, etc.) bundled an authentication and authorization layer within the framework itself.

It is the most straightforward form of authentication and authorization: before requests hit the actual API, they always have to go through an auth layer, and they only reach the API if they are authenticated and authorized to do so.
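To make that concrete, here is a minimal sketch of such an auth layer in Python. It is not how any particular framework does it: `Request`, `SESSIONS`, `PERMISSIONS` and `require` are names I made up for illustration, and the in-memory dictionaries stand in for whatever session and permission storage a real framework would use.

```python
from dataclasses import dataclass, field
from functools import wraps

# Hypothetical in-memory stores; a real framework would back these with a database.
SESSIONS = {"session-abc": "alice", "session-xyz": "bob"}  # session id -> user
PERMISSIONS = {"alice": {"orders:read"}}                   # user -> granted permissions


@dataclass
class Request:
    path: str
    cookies: dict = field(default_factory=dict)


def require(permission):
    """Wrap a handler so it only runs for authenticated and authorized requests."""
    def decorator(handler):
        @wraps(handler)
        def wrapped(request):
            # Authentication: who is making the request?
            user = SESSIONS.get(request.cookies.get("session_id"))
            if user is None:
                return 401, "not authenticated"
            # Authorization: is that user allowed to do this?
            if permission not in PERMISSIONS.get(user, set()):
                return 403, "not authorized"
            return handler(request, user)
        return wrapped
    return decorator


@require("orders:read")
def list_orders(request, user):
    return 200, f"orders for {user}"
```

The handler itself never looks at cookies or permissions; by the time it runs, the layer in front of it has already decided that the request may pass.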

This is usually the first choice when someone starts a project: they pick a framework, follow whatever auth practices the framework uses, and call it a day. There is really no right or wrong answer here. We all make choices based on the current circumstances, and we only find out whether it was the correct decision months later.

I personally don’t like this approach because it ties me to the monolith way of doing things, and down the road it makes it harder to split the big codebase into smaller chunks (microservices) when the time comes. There are of course tools for dealing with monoliths, but I haven’t encountered a monolith codebase that I really want to work with (yet).

Then comes the microservice architecture

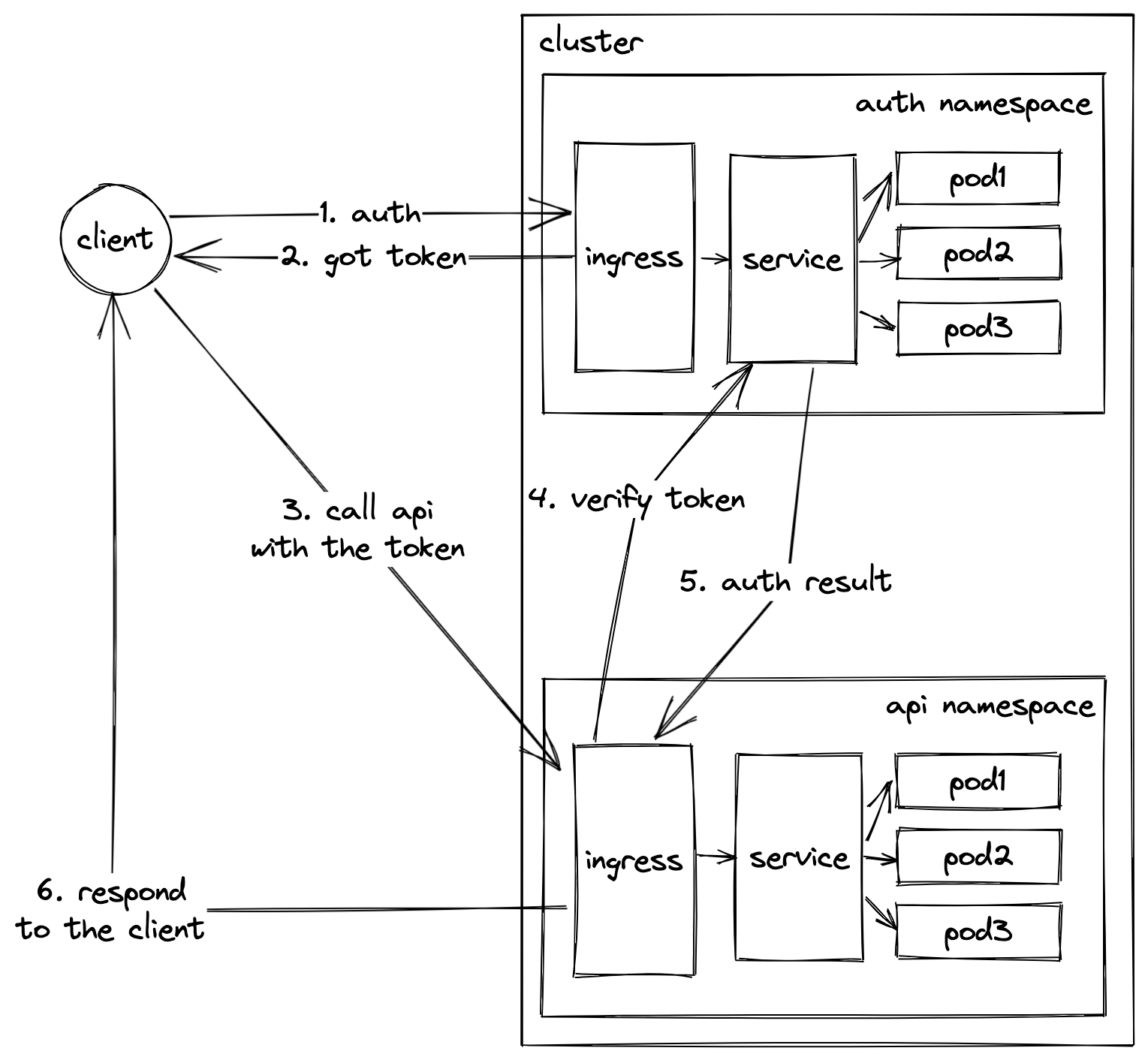

After the monolith era, there was a growing trend toward microservice architecture as software systems became a lot bigger, and so did software development teams. In a microservice architecture, authentication and authorization (I will group both into just “auth”, although they are really different in nature) are usually handled in a centralized service. This separation opens up a lot of different ways to do auth.

The common flow is that the client, after being authenticated, is granted some kind of token (for example an OAuth access token) that it has to include in every subsequent request made to the API.
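As a rough illustration of that flow, the sketch below issues and checks a signed token using only the Python standard library. It is a simplified stand-in and not OAuth: `SECRET`, `issue_token`, `verify_token` and `auth_headers` are hypothetical names, and a real system would rely on an established token format and library instead.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-secret"  # assumption: a key known only to the auth service


def issue_token(user, ttl_seconds=3600, now=None):
    """Auth service side: return a signed token for an already authenticated user."""
    now = time.time() if now is None else now
    claims = json.dumps({"sub": user, "exp": now + ttl_seconds}).encode()
    payload = base64.urlsafe_b64encode(claims).decode()
    signature = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{signature}"


def verify_token(token, now=None):
    """Return the user the token was issued to, or None if it is invalid or expired."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return None  # no "." separator, so this is not one of our tokens
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if claims["exp"] < now:
        return None
    return claims["sub"]


def auth_headers(token):
    """Client side: the header attached to every subsequent API request."""
    return {"Authorization": f"Bearer {token}"}
```

The client never needs to understand the token. It only stores it and sends it back, and whoever sits in front of the API decides whether it is still valid.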

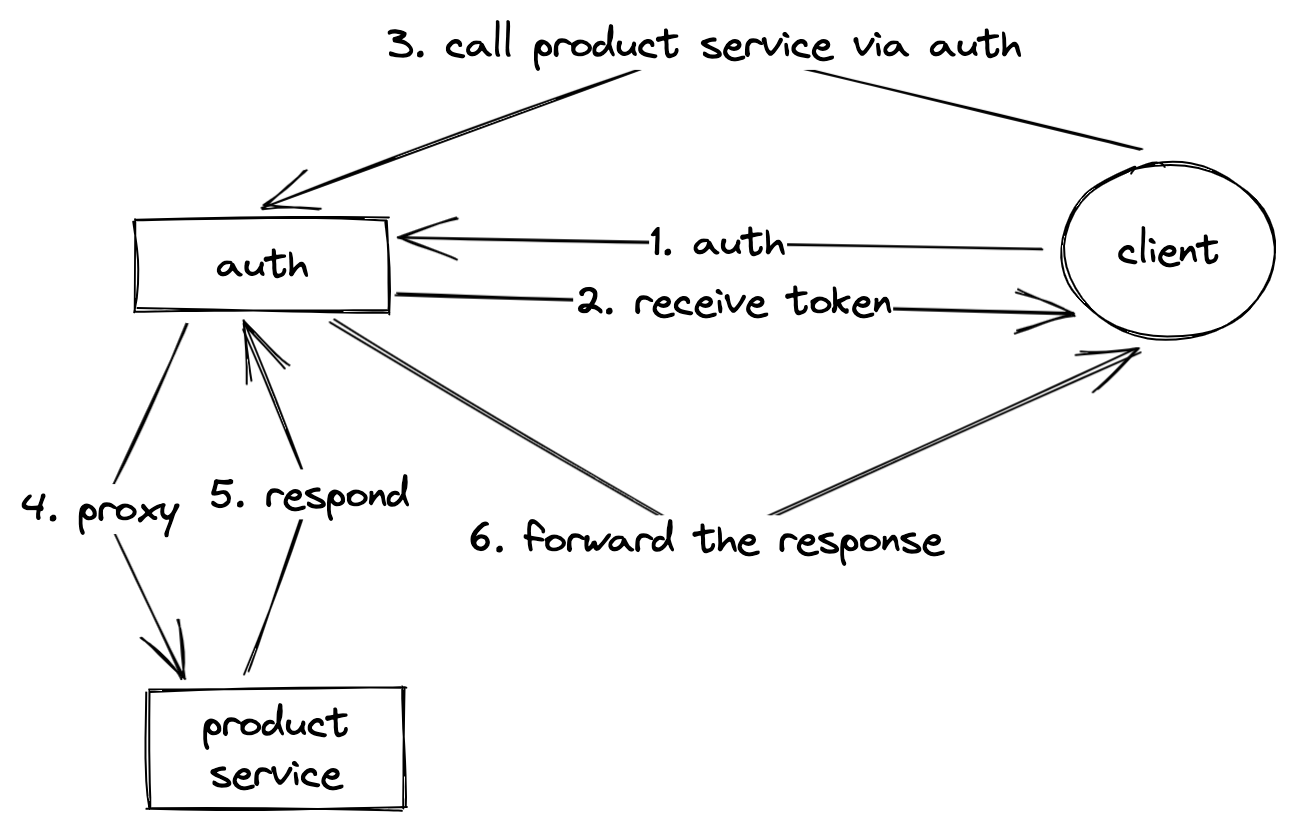

Proxy requests via the auth service

In this approach, the auth service also acts as a proxy, so instead of calling the API directly the client would have to go through the auth service.

This is somewhat similar to the monolith structure, but split into two distinct components. The biggest problem with this approach is that the auth service becomes a single point of failure. If something ever happens to it, all access to the API is interrupted.

On the other hand, this approach keeps the auth logic nicely separated from the actual services. They don’t need to know anything about it, which makes development and testing a lot easier.
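Stripped of all networking, the proxy idea can be sketched in a few lines of Python. Everything here is hypothetical: `make_auth_proxy` is a name I made up, `verify_token` stands for whatever check the auth service performs, and the upstream services are plain callables instead of real HTTP backends.

```python
def make_auth_proxy(verify_token, upstreams):
    """Return a handler that authenticates a request, then forwards it upstream.

    verify_token: callable(token) -> user or None (hypothetical auth check)
    upstreams:    dict mapping a path prefix to a callable standing in for a service
    """
    def handle(path, headers):
        scheme, _, token = headers.get("Authorization", "").partition(" ")
        user = verify_token(token) if scheme == "Bearer" else None
        if user is None:
            return 401, "unauthenticated"
        for prefix, service in upstreams.items():
            if path.startswith(prefix):
                # The service never sees the token, only the resolved identity.
                return service(path, {"X-User": user})
        return 404, "no such service"
    return handle


# Usage: one fake service behind the proxy and a fake token store.
tokens = {"t1": "alice"}
proxy = make_auth_proxy(
    tokens.get,
    {"/orders": lambda path, headers: (200, f"orders for {headers['X-User']}")},
)
```

The service behind `/orders` contains no auth code at all, which is the upside. The downside is just as visible: every request goes through `handle`, so if it is down, nothing is reachable.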

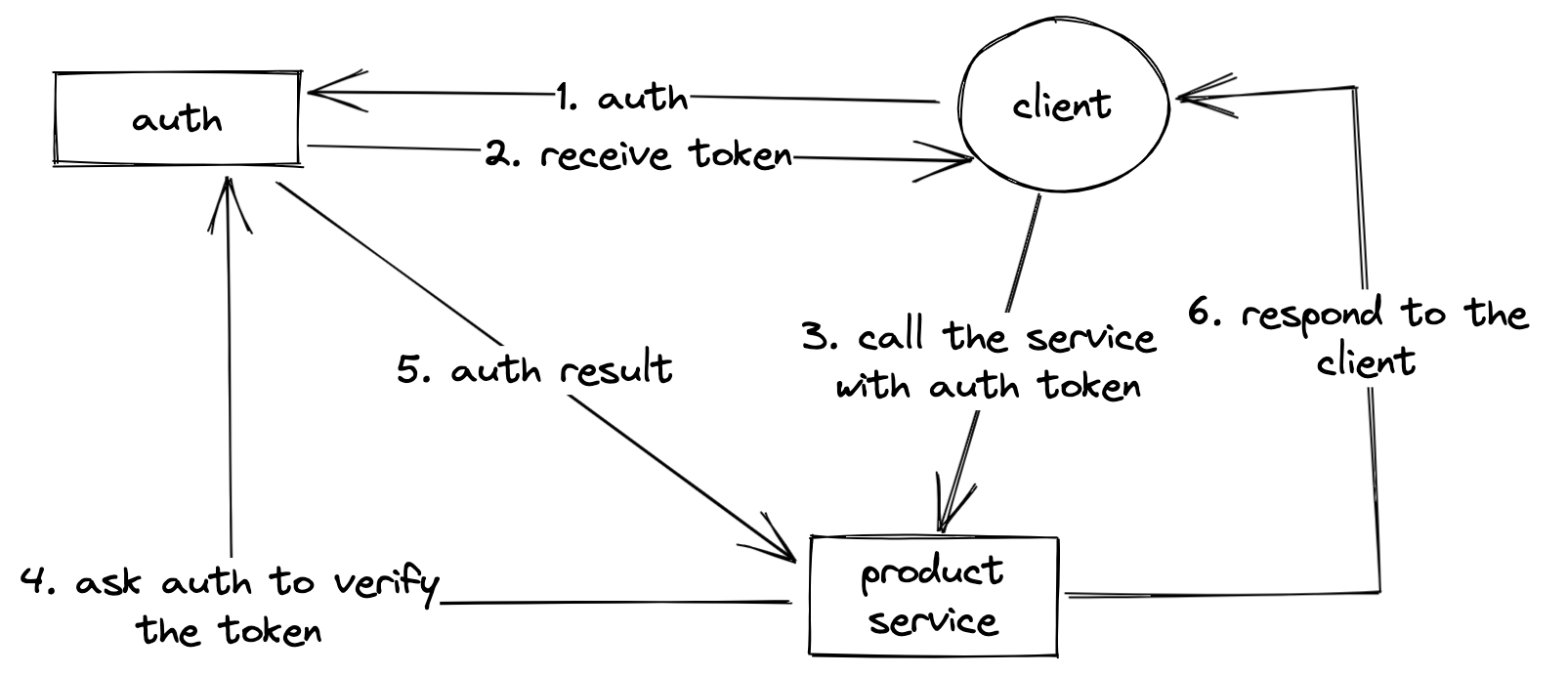

Auth as a middleware in each individual service

In this approach, each individual service implements a thin middleware that calls the auth service to authenticate the incoming requests.

This approach maintains a high level of separation between services and mitigates the impact of the auth service being offline. That is of course a whole different topic, since each service now has to fall back to something else to authenticate requests when the auth service doesn’t respond.
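Here is a minimal sketch of such a middleware in Python, with one possible fallback: reuse a recent successful check when the auth service cannot be reached. The caching strategy is my own assumption and only one of several options, and `AuthMiddleware`, `AuthUnavailable` and `check_token` are hypothetical names.

```python
import time


class AuthUnavailable(Exception):
    """Raised by the (hypothetical) auth client when the auth service is unreachable."""


class AuthMiddleware:
    def __init__(self, app, check_token, cache_ttl=60, clock=time.monotonic):
        self.app = app                  # callable(request, user) -> response
        self.check_token = check_token  # callable(token) -> user or None; may raise AuthUnavailable
        self.cache_ttl = cache_ttl      # how long a successful check may be reused, in seconds
        self.clock = clock
        self._cache = {}                # token -> (user, time of last successful check)

    def __call__(self, request):
        token = request.get("token")
        try:
            user = self.check_token(token)
            if user is not None:
                self._cache[token] = (user, self.clock())
            else:
                self._cache.pop(token, None)  # the token was explicitly rejected
        except AuthUnavailable:
            # Fallback: trust a recent successful check instead of failing outright.
            user = self._from_cache(token)
            if user is None:
                return 503, "auth service unavailable"
        if user is None:
            return 401, "unauthenticated"
        return self.app(request, user)

    def _from_cache(self, token):
        entry = self._cache.get(token)
        if entry is None:
            return None
        user, checked_at = entry
        if self.clock() - checked_at > self.cache_ttl:
            return None
        return user
```

The trade-off is that a token revoked during an outage stays usable until its cache entry expires, so the TTL is really a decision about how much staleness you can tolerate.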

However, this approach means either keeping the auth middleware logic in one common place or copy/pasting it all over the place. And since one of the advantages of the microservice architecture is the freedom for each service to use whatever tech stack it wants, a single shared library cannot cover every service. So in any case, this auth middleware will be duplicated in one form or another.

There are many other variants, but most come down to when the auth happens: before the request reaches its destination or after it has arrived there.

Entering the cloud era

As systems become more and more complex, with a lot of moving parts, the way we manage them also changes. In the past, a deployment basically meant grabbing the source code, sending it to the server (the mighty FTP approach, or SCP if you fancy), and running it. Of course we were not doing all of that manually, but via some automation tools.

Container technologies and container orchestration forever changed how we deploy web services, and with that, many patterns emerged. During my trip to the cloud, I have also encountered various authentication setups. They are new and shiny, but the core question is basically the same: at which point do we “intercept” the requests and run the auth logic?

The following sections assume that you are familiar with basic k8s concepts such as ingress, service, deployment and pod. I will only describe the concepts in k8s terms because that is what I am most familiar with, but the same ideas can probably be applied with a different container orchestrator.

Auth happens at the ingress controller

The ingress controller is where your external traffic is “converted” into internal calls to different k8s services. It’s quite common for API gateways to provide their own implementation of the k8s ingress controller, with plugins/extensions/middlewares that intercept requests for authentication.

With this setup, the application services are not aware of the auth service. Instead, they can assume that if a request has arrived, it has a valid identity. This is similar to proxying the request via the auth service when deploying without k8s. This approach requires the ingress to be able to forward the request to an auth service or to perform the authentication by itself. The ingress specification doesn’t include this functionality by default, so most ingress controllers have their own way of doing it.
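One shape the “forward the request to an auth service” option can take is a subrequest: the controller sends the original request headers to an auth endpoint and only lets the request through on a 2xx response. I am assuming that convention in the Python sketch below, so check your ingress controller’s documentation for the exact contract. `VALID_TOKENS`, `decide`, `AuthCheckHandler`, `serve` and the `X-Auth-User` header are hypothetical names.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

VALID_TOKENS = {"t1": "alice"}  # hypothetical token store


def decide(headers):
    """Return (status, extra response headers) for an auth subrequest."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    user = VALID_TOKENS.get(token) if scheme == "Bearer" else None
    if user is None:
        return 401, {"WWW-Authenticate": "Bearer"}
    # Assumption: the controller is configured to copy this header to the upstream request.
    return 200, {"X-Auth-User": user}


class AuthCheckHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, extra = decide(self.headers)
        self.send_response(status)
        for name, value in extra.items():
            self.send_header(name, value)
        self.send_header("Content-Length", "0")
        self.end_headers()


def serve(port=8080):
    """Run the auth endpoint; not called here so the sketch has no side effects."""
    HTTPServer(("", port), AuthCheckHandler).serve_forever()
```

The application services stay completely unaware of this endpoint. All they ever see is a request that has already passed the check, possibly with an identity header attached.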

This setup is straightforward, but it depends a lot on the ingress class: some have better support than others. It also goes some way toward avoiding a single point of failure at the entry point: if we have a lot of services, each with its own ingress class, the traffic is spread across different ingresses. However, the auth service itself is obviously still a bottleneck and a single point of failure. There are ways to mitigate that as well, but they are outside the scope of this post.

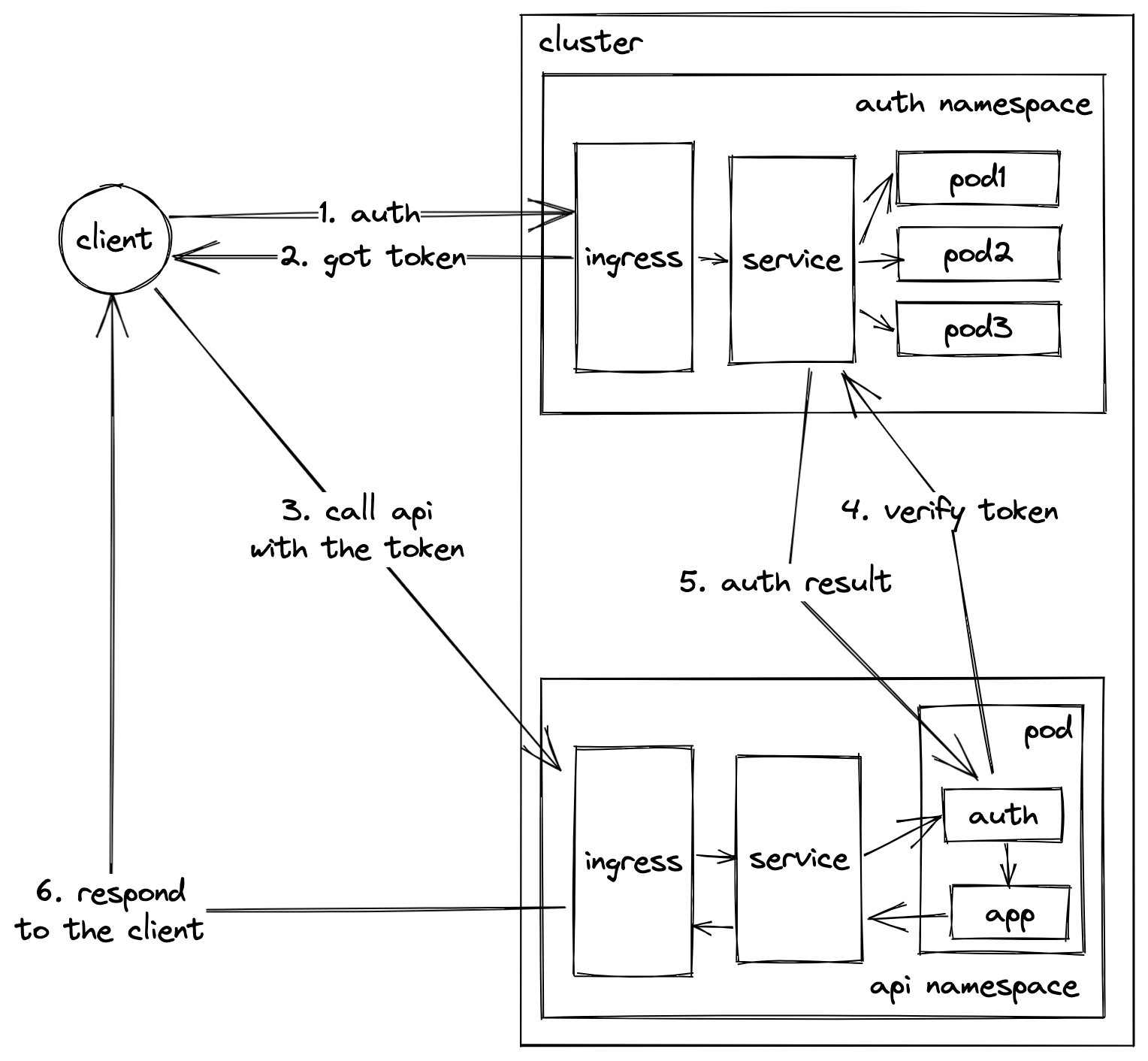

Auth happens at a sidecar container

Sidecar containers are a common pattern found in most service mesh implementations. The idea is to have a separate container running right next to the main application container in the same pod. This sidecar container then intercepts all the network traffic going in and out of the application container and does something with it (so it is basically a proxy).

With that simplified definition out of the way, how can we fit auth in here? The idea is similar to that of using an ingress controller: the sidecar container intercepts the requests and performs the necessary auth checks before forwarding them to the application container.

Although this approach looks similar to the ingress controller approach, in the sense that both intercept the requests before forwarding them to the application container, sidecar containers are a completely different beast:

- There is at least one sidecar container per pod, as opposed to a single ingress controller shared by many services => sidecar containers require more resources

- Sidecar containers must be injected into each pod either automatically by some kind of injector or manually via the relevant deployment manifest => more complex setup than using an ingress controller

However, a sidecar container opens up a lot more possibilities because we can implement it however we want (and in whatever tech stack), and there are ready-made solutions as well. It also makes the architecture more flexible because we don’t have to depend on a specific ingress implementation.
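To show how little a hand-rolled sidecar needs, here is a deliberately naive sketch in Python. It assumes the application container listens on `127.0.0.1:8080` inside the pod and that the sidecar is exposed on its own port, whereas real service meshes usually redirect traffic to the sidecar transparently. `APP_URL`, `VALID_TOKENS`, `authenticate`, `upstream_request`, `SidecarHandler` and `serve` are all hypothetical names.

```python
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

APP_URL = "http://127.0.0.1:8080"  # assumption: the application container's address in the pod
VALID_TOKENS = {"t1": "alice"}     # hypothetical; a real sidecar would ask the auth service


def authenticate(headers):
    """Return the user behind a Bearer token, or None."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    return VALID_TOKENS.get(token) if scheme == "Bearer" else None


def upstream_request(path, user):
    """Build the request the sidecar sends on to the application container."""
    return urllib.request.Request(APP_URL + path, headers={"X-Auth-User": user})


class SidecarHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        user = authenticate(self.headers)
        if user is None:
            self.send_error(401, "unauthenticated")
            return
        try:
            with urllib.request.urlopen(upstream_request(self.path, user)) as resp:
                status, body = resp.status, resp.read()
        except urllib.error.HTTPError as err:
            status, body = err.code, err.read()  # pass application errors through
        except urllib.error.URLError:
            status, body = 502, b"application container unreachable"
        self.send_response(status)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=15001):
    """Run the sidecar; not called here so the sketch has no side effects."""
    HTTPServer(("", port), SidecarHandler).serve_forever()
```

Because the sidecar is just another container, the same logic could be written in any language, independently of what the application itself uses.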

But that’s not everything!

Although I have had to deal with this kind of decision frequently during my career, there is considerably more to the topic than I can possibly wrap my head around. When it comes to software engineering, it’s a never-ending cycle of building and improving your architecture. With many interesting technologies coming up, I’m intrigued by what’s to come.